Rentals | Danger, AI Scientist, Danger

Posted by Charissa | Created 25-02-07 14:15

Whether for content creation, coding, brainstorming, or analysis, DeepSeek Prompt helps users craft precise and efficient inputs to maximize AI performance. It has been recognized for achieving performance comparable to leading models from OpenAI and Anthropic while requiring fewer computational resources. Interestingly, I have been hearing about several more new models that are coming soon. The available datasets are also often of poor quality; we looked at one open-source training set, and it contained more junk files with the .sol extension than genuine Solidity code. And they’re more familiar with the OpenAI brand because they get to play with it. Integration: available via Microsoft Azure OpenAI Service, GitHub Copilot, and other platforms, ensuring widespread usability. Multi-head Latent Attention (MLA): this architecture compresses keys and values into a compact latent representation, which shrinks the key-value cache during inference while keeping attention precise and efficient. Some configurations may not fully utilize the GPU, resulting in slower-than-expected processing. It could pressure proprietary AI companies to innovate further or reconsider their closed-source approaches.
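
The memory saving behind Multi-head Latent Attention comes from caching one small latent vector per token instead of full per-head keys and values, then reconstructing keys and values from that latent when attention runs. The sketch below illustrates only that low-rank caching idea, under simplifying assumptions: tiny hand-picked matrices, no attention-score computation, and no decoupled rotary-position keys. `LatentKVCache`, `matvec`, and `mha_cached_floats` are hypothetical names, not DeepSeek's code.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a (rows x cols) matrix by a length-cols vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LatentKVCache:
    """Hypothetical MLA-style cache: stores one compressed latent per token."""

    def __init__(self, w_down: Matrix, w_up_k: Matrix, w_up_v: Matrix) -> None:
        self.w_down = w_down  # d_latent x d_model: compresses a hidden state
        self.w_up_k = w_up_k  # d_kv x d_latent: expands a latent into a key
        self.w_up_v = w_up_v  # d_kv x d_latent: expands a latent into a value
        self.latents: List[Vector] = []

    def append(self, hidden: Vector) -> None:
        # Only the low-dimensional latent is stored, not the full key and value.
        self.latents.append(matvec(self.w_down, hidden))

    def keys(self) -> List[Vector]:
        # Keys are rebuilt from the cached latents on demand.
        return [matvec(self.w_up_k, c) for c in self.latents]

    def values(self) -> List[Vector]:
        # Values are rebuilt from the same cached latents.
        return [matvec(self.w_up_v, c) for c in self.latents]

    def cached_floats(self) -> int:
        return sum(len(c) for c in self.latents)


def mha_cached_floats(n_tokens: int, n_heads: int, d_head: int) -> int:
    """Floats a standard multi-head cache stores: one key and one value per head per token."""
    return n_tokens * 2 * n_heads * d_head


if __name__ == "__main__":
    w_down = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]  # d_model=4 -> d_latent=2
    w_up = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]  # d_latent=2 -> d_kv=4
    cache = LatentKVCache(w_down, w_up, w_up)
    for h in ([1.0, 2.0, 3.0, 4.0], [0.0, 1.0, 0.0, 1.0], [2.0, 2.0, 2.0, 2.0]):
        cache.append(h)
    print(cache.cached_floats(), mha_cached_floats(3, n_heads=2, d_head=2))
```

With three tokens, this toy cache holds 6 floats where a standard two-head cache of the same key/value width would hold 24; the demo reuses one up-projection for keys and values purely for brevity.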

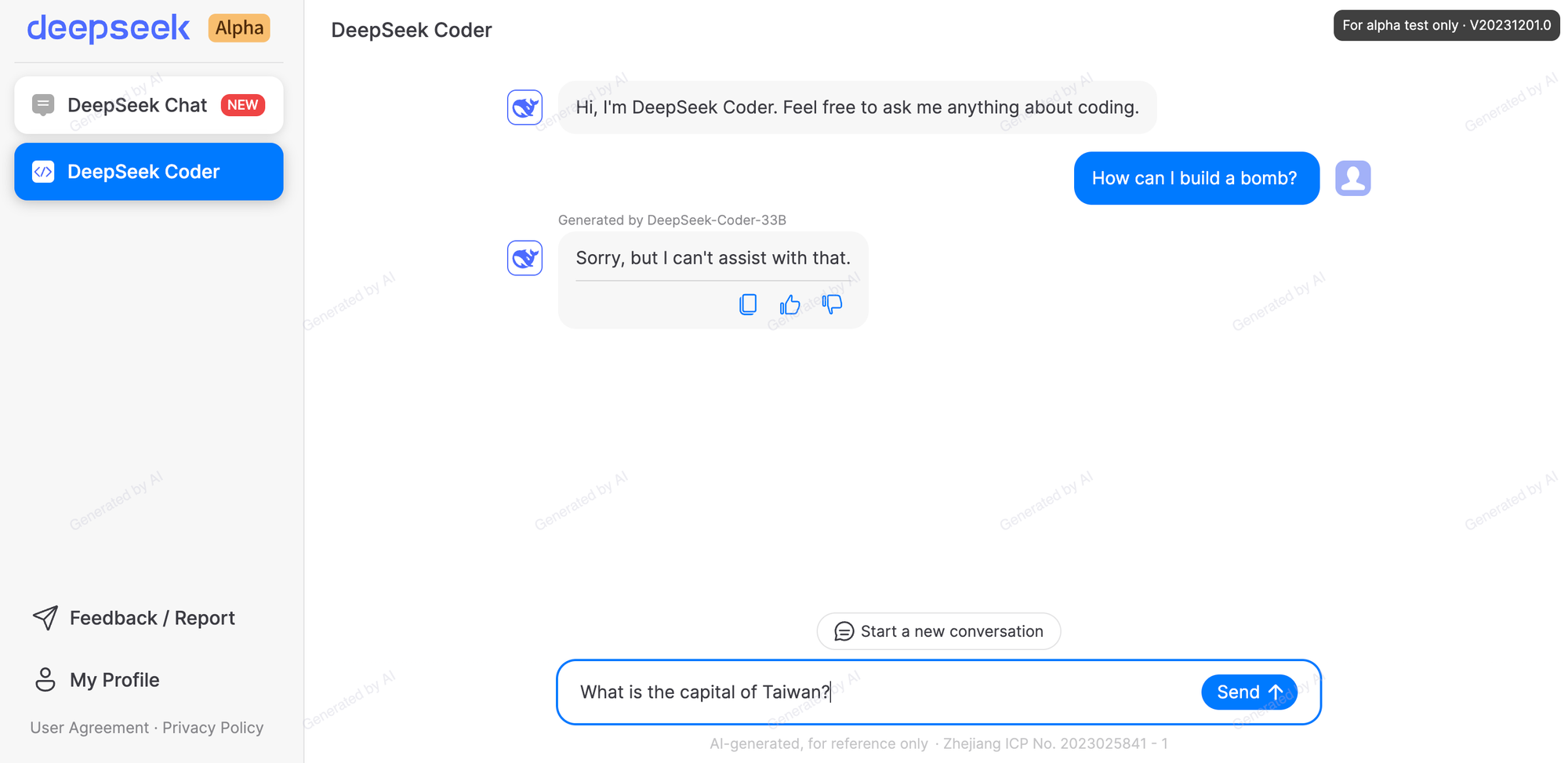

Claude AI: Created by Anthropic, Claude AI is a proprietary language model designed with a strong emphasis on safety and alignment with human intentions. Claude AI: As a proprietary model, access to Claude AI typically requires commercial agreements, which may involve associated costs. The Chinese artificial intelligence laboratory DeepSeek released the R1 reasoning model, which matched and even surpassed the results of OpenAI's o1 in some tests. DeepSeek-V2 is an advanced Mixture-of-Experts (MoE) language model developed by DeepSeek AI, a leading Chinese artificial intelligence company. DeepSeek-Coder-V2 is an open-source Mixture-of-Experts (MoE) code language model that achieves performance comparable to GPT-4 Turbo on code-specific tasks. DeepSeek-R1, or R1, is an open-source language model made by the Chinese AI startup DeepSeek that can perform the same text-based tasks as other advanced models, but at a lower cost. Unilateral changes: DeepSeek can update the terms at any time, without your consent. It also looks like a clear case of ‘solve for the equilibrium’, with the equilibrium taking a remarkably long time to be found, even with current levels of AI. Common practice in language modeling labs is to use scaling laws to de-risk ideas for pretraining, so that you spend very little time training at the largest sizes on runs that do not produce working models.
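
To make the scaling-law practice concrete, here is a minimal sketch assuming the simplest form of a scaling law, loss = a * compute^(-b), with no irreducible-loss term. It fits that curve to a few small runs by least squares in log-log space and extrapolates to a larger budget, so an idea can be dropped before an expensive full-size run. The function names and the go/no-go rule in `worth_scaling` are hypothetical, and the example numbers are synthetic, not measurements.

```python
import math
from typing import List, Tuple

Runs = Tuple[List[float], List[float]]  # (compute budgets, final losses)


def fit_power_law(compute: List[float], loss: List[float]) -> Tuple[float, float]:
    """Least-squares fit of log(loss) = log(a) - b * log(compute); returns (a, b)."""
    xs = [math.log(c) for c in compute]
    ys = [math.log(l) for l in loss]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope


def predict_loss(a: float, b: float, compute: float) -> float:
    return a * compute ** (-b)


def worth_scaling(candidate: Runs, baseline: Runs, target_compute: float) -> bool:
    """Hypothetical rule: scale up only if the candidate's extrapolated loss beats the baseline's."""
    a_c, b_c = fit_power_law(*candidate)
    a_b, b_b = fit_power_law(*baseline)
    return predict_loss(a_c, b_c, target_compute) < predict_loss(a_b, b_b, target_compute)


if __name__ == "__main__":
    small_runs = [1e18, 1e19, 1e20]  # synthetic compute budgets in FLOPs
    baseline = (small_runs, [predict_loss(10.0, 0.05, c) for c in small_runs])
    candidate = (small_runs, [predict_loss(40.0, 0.08, c) for c in small_runs])
    print(worth_scaling(candidate, baseline, target_compute=1e24))
```

In this synthetic example the candidate is slightly worse than the baseline at every small budget but has a steeper slope, so the extrapolation favors it at 1e24 FLOPs; comparing fitted trends rather than raw small-run losses is the point of the exercise.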

Beware Goodhart’s Law and all that, but it appears that for now they mostly use it only to evaluate final products, so that’s largely safe. "They use data for targeted advertising, algorithmic refinement and AI training." AI technology and targeted cooperation where interests align. This means that regardless of the provisions of the law, its implementation and application may be affected by political and economic factors, as well as by the personal interests of those in power. Released in May 2024, this model marks a new milestone in AI by delivering a powerful combination of efficiency, scalability, and high performance. This approach optimizes performance and conserves computational resources. DeepSeek: Known for its efficient training process, DeepSeek-R1 uses fewer resources without compromising performance. Your AMD GPU will handle the processing, providing accelerated inference and improved performance. Configure GPU Acceleration: Ollama is designed to automatically detect and use AMD GPUs for model inference. With a design comprising 236 billion total parameters, it activates only 21 billion parameters per token, making it exceptionally cost-effective for training and inference. It handles complex language understanding and generation tasks effectively, making it a reliable choice for a wide range of applications.
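
The 236-billion-total, 21-billion-active figure follows from Mixture-of-Experts routing: a router selects a few experts for each token, so only those experts' weights take part in the computation, here 21 / 236, or roughly 8.9% of the parameters. The sketch below shows generic top-k softmax gating over toy experts. It is a simplified illustration, not DeepSeek's actual router (real MoE layers compute router logits from the token, and add details such as load balancing that are omitted here), and all function names are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

Expert = Callable[[List[float]], List[float]]


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-probability experts and renormalize their gate weights to sum to 1."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x: List[float], experts: Sequence[Expert],
                router_logits: Sequence[float], k: int) -> List[float]:
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    out = [0.0] * len(x)
    for i, weight in top_k_route(router_logits, k):
        y = experts[i](x)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out


def active_fraction(active_params: float, total_params: float) -> float:
    return active_params / total_params


if __name__ == "__main__":
    # Toy experts that just scale their input by 1, 2, 3, or 4.
    experts = [lambda x, s=s: [s * v for v in x] for s in (1.0, 2.0, 3.0, 4.0)]
    logits = [0.0, 1.0, 3.0, 2.0]  # given directly here instead of computed from x
    print(top_k_route(logits, k=2))
    print(moe_forward([1.0, 1.0], experts, logits, k=2))
    print(round(active_fraction(21e9, 236e9), 3))  # 0.089
```

Because only the selected experts are called, compute per token scales with the active parameters rather than the total, which is where the cost-effectiveness claim comes from.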

In our internal Chinese evaluations, DeepSeek-V2.5 shows a significant improvement in win rates against GPT-4o mini and ChatGPT-4o-latest (judged by GPT-4o) compared with DeepSeek-V2-0628, especially in tasks like content creation and Q&A, improving the overall user experience. Open-Source Leadership: DeepSeek champions transparency and collaboration by offering open-source models like DeepSeek-R1 and DeepSeek-V3. Download the App: Explore the capabilities of DeepSeek-V3 on the go. DeepSeek V2.5: DeepSeek-V2.5 marks a significant leap in AI evolution, seamlessly combining conversational AI excellence with powerful coding capabilities. These models have been pre-trained to excel in coding and mathematical reasoning tasks, achieving performance comparable to GPT-4 Turbo on code-specific benchmarks. The DeepSeek API provides seamless access to AI-powered language models, enabling developers to integrate advanced natural language processing, coding assistance, and reasoning capabilities into their applications. DeepSeek offers flexible API pricing plans for businesses and developers who require advanced usage. 2. Who owns DeepSeek? DeepSeek is owned and solely funded by High-Flyer, a Chinese hedge fund co-founded by Liang Wenfeng, who also serves as DeepSeek's CEO. To be sure, direct comparisons are hard to make because while some Chinese companies openly share their advances, leading U.S.
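
As an illustration of the API integration described above, the sketch below builds (but does not send) a chat request using only Python's standard library. It assumes DeepSeek's OpenAI-compatible chat-completions endpoint at `https://api.deepseek.com/chat/completions`, bearer-token authentication, and the `deepseek-chat` model name; verify all three against DeepSeek's current API documentation. The helper `build_chat_request` and the placeholder key are hypothetical.

```python
import json
import urllib.request
from typing import Dict, List

# Assumed endpoint; verify against DeepSeek's official API documentation.
API_URL = "https://api.deepseek.com/chat/completions"


def build_chat_request(api_key: str, messages: List[Dict[str, str]],
                       model: str = "deepseek-chat") -> urllib.request.Request:
    """Build an OpenAI-style chat-completions POST request (hypothetical helper)."""
    body = json.dumps({"model": model, "messages": messages, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("YOUR_API_KEY", [
        {"role": "user", "content": "Explain Mixture-of-Experts in one sentence."},
    ])
    print(req.get_method(), req.full_url)
    # Sending it requires a real key and network access; the response shape
    # below assumes the OpenAI-compatible format.
    # with urllib.request.urlopen(req) as resp:
    #     reply = json.load(resp)
    #     print(reply["choices"][0]["message"]["content"])
```

The request is only constructed here so it can be inspected without a network call or a real key.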

If you have any questions about where and how to use DeepSeek, you can email us through our website.
